Artificial Intelligence (AI) has made significant strides in various domains, including software development. AI models can now generate code snippets, suggest improvements, and even assist in automating repetitive tasks.

However, when it comes to debugging (identifying and fixing errors in code), AI still has a long way to go.

The Current State of AI in Debugging

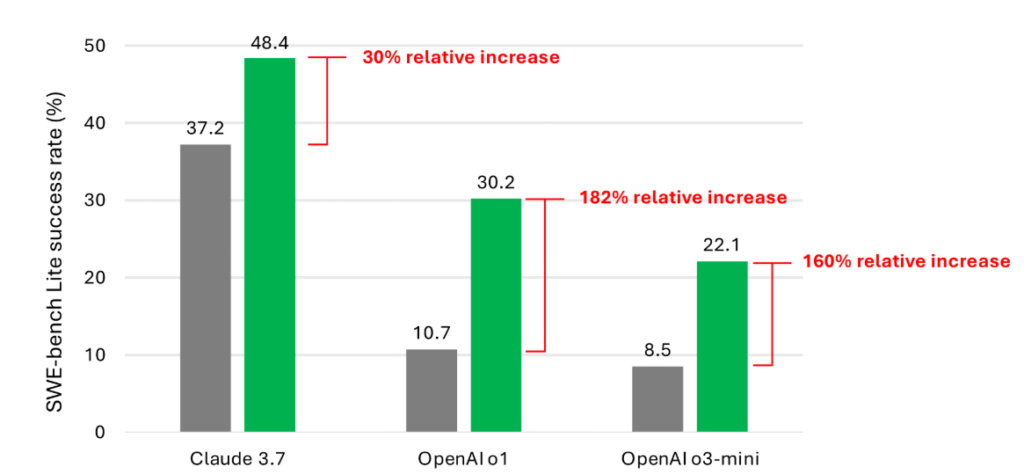

Recent studies have shown that even advanced AI models struggle with debugging tasks. For instance, a Microsoft Research study evaluated how well several AI models could fix bugs across 300 debugging tasks from the SWE-bench Lite benchmark.

The results were telling: the best-performing model, Claude 3.7 Sonnet, achieved a success rate of only 48.4%, while OpenAI’s o1 and o3-mini lagged behind at 30.2% and 22.1%, respectively.

These findings highlight a significant gap between AI capabilities and human expertise in debugging. While AI can assist in code generation, understanding the context of errors and applying logical reasoning to fix them remains a challenge.

Introducing Debug-Gym: A Step Towards Human-Like Debugging

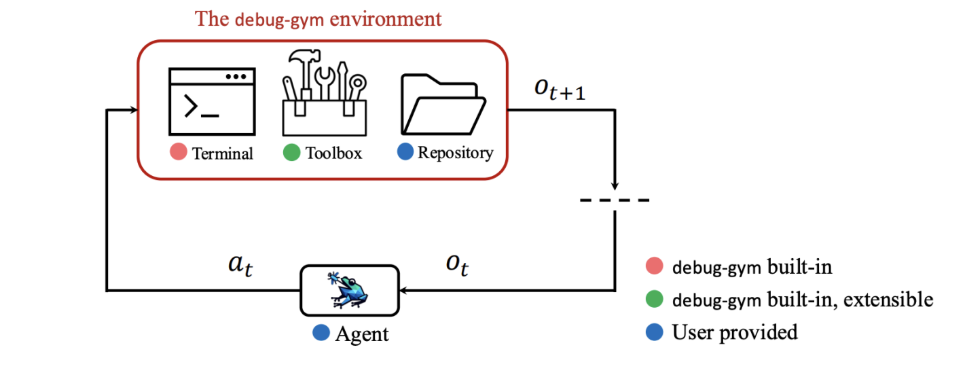

To address these challenges, Microsoft Research has developed Debug-Gym, a novel environment designed to train AI coding tools in the complex art of debugging code.

Debug-Gym provides a text-based interface where AI agents can interactively debug code using tools like Python’s built-in debugger (pdb).

This setup allows AI models to simulate the step-by-step process that human programmers follow when identifying and fixing bugs.

By engaging in interactive debugging sessions, AI agents can learn to make sequential decisions, gather necessary information, and apply logical reasoning to correct errors.

This approach aims to bridge the gap between AI’s current capabilities and the nuanced understanding required for effective debugging.
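To make the idea of a text-based debugging interface concrete, here is a minimal sketch that drives Python's standard pdb through a pipe. It is not Debug-Gym's own tool interface; the buggy script, the file name `buggy.py`, and the helper `run_pdb_session` are hypothetical. Each pdb command plays the role of an agent's action, and pdb's printed reply is the observation the agent would read before deciding what to do next.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

# Hypothetical buggy script: the average divides by len(values) - 1
# instead of len(values).
BUGGY_SOURCE = """\
def average(values):
    total = 0
    for v in values:
        total += v
    return total / (len(values) - 1)

print(average([2, 4, 6]))
"""


def run_pdb_session(source: str, commands: list[str]) -> str:
    """Run `source` under pdb, feed it `commands`, and return pdb's stdout."""
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "buggy.py"
        script.write_text(source)
        result = subprocess.run(
            [sys.executable, "-m", "pdb", str(script)],
            input="\n".join(commands) + "\n",  # one pdb command per line
            capture_output=True,
            text=True,
            timeout=30,  # guard against a session that never ends
        )
    return result.stdout


# Set a breakpoint on the return line, run to it, inspect state, then quit.
transcript = run_pdb_session(
    BUGGY_SOURCE,
    ["break 5", "continue", "p total", "p len(values)", "quit"],
)
print(transcript)
```

The transcript shows `total` is 12 and `len(values)` is 3, which points straight at the off-by-one denominator. For simplicity this sketch sends all commands up front; an agent making genuinely sequential decisions would keep the debugger process open and choose each command only after reading the previous output.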

The Importance of Sequential Decision-Making

One of the key insights from Microsoft’s research is the importance of sequential decision-making in debugging.

Human programmers often follow a structured approach: they identify the problem, gather relevant information, test hypotheses, and apply fixes. AI models, by contrast, often lack this structured methodology.

Debug-Gym addresses this by providing AI agents with an environment to practice these steps. By simulating real-world debugging scenarios, AI models can learn to navigate the complexities of code correction more effectively.
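The four steps above can be illustrated with a toy loop. This is a minimal sketch under simplifying assumptions, not how Debug-Gym or any model works internally: the buggy function, the single check, and the hand-written list of candidate fixes in `HYPOTHESES` are all hypothetical. The point is the order of operations: confirm the failure, record what actually happens, then test hypotheses one at a time and keep the first fix that passes.

```python
from typing import Callable, Optional

Fn = Callable[[list[float]], float]


def buggy_average(values: list[float]) -> float:
    # Hypothetical bug: divides by len(values) - 1 instead of len(values).
    return sum(values) / (len(values) - 1)


def passes_check(fn: Fn) -> bool:
    # A single known input/output pair acts as the failing test.
    return fn([2.0, 4.0, 6.0]) == 4.0


# Each candidate fix encodes one hypothesis about the cause of the bug.
HYPOTHESES: dict[str, Fn] = {
    "denominator should be len(values) + 1": lambda v: sum(v) / (len(v) + 1),
    "denominator should be len(values)": lambda v: sum(v) / len(v),
}


def debug(fn: Fn, hypotheses: dict[str, Fn]) -> Optional[str]:
    # 1. Identify the problem: does the check fail at all?
    if passes_check(fn):
        return None
    # 2. Gather information: record what the code actually returns.
    observed = fn([2.0, 4.0, 6.0])
    print(f"observed {observed}, expected 4.0")
    # 3. Test hypotheses in order; 4. apply the first fix that passes.
    for name, candidate in hypotheses.items():
        if passes_check(candidate):
            return name
    return None


print(debug(buggy_average, HYPOTHESES))
```

Here the observed value (6.0 instead of 4.0) already hints that the denominator is too small, and the loop rejects the first hypothesis before accepting the second. A real agent would generate its hypotheses from what it observed rather than from a fixed list.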

Challenges and Limitations

Despite these advancements, AI models still face significant challenges in debugging. One major issue is the lack of training data that captures the intricacies of human debugging processes. Without sufficient examples of how programmers approach and resolve bugs, AI models struggle to replicate these behaviors.

Additionally, AI models often have difficulty understanding the broader context of code, which is crucial for effective debugging. They may fix a specific error without recognizing its implications for other parts of the program, leading to further issues.
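A small, hypothetical example shows how a locally correct fix can break something elsewhere. Assume a shared helper formats prices for two callers, a receipt and a CSV export, and a bug report says the receipt is missing its currency symbol. The names `format_price_original`, `format_price_narrow_fix`, and `run_suite` are invented for illustration.

```python
from typing import Callable


def format_price_original(cents: int) -> str:
    return f"{cents / 100:.2f}"


def format_price_narrow_fix(cents: int) -> str:
    # "Fixes" the receipt bug by adding "$" inside the shared helper.
    return f"${cents / 100:.2f}"


def run_suite(fmt: Callable[[int], str]) -> dict[str, bool]:
    # Two call sites share the same helper.
    receipt_line = f"Coffee: {fmt(450)}"  # should show a currency symbol
    csv_row = f"Coffee,{fmt(450)}"        # must stay a plain number
    return {
        "receipt shows currency": receipt_line == "Coffee: $4.50",
        "csv stays numeric": csv_row == "Coffee,4.50",
    }


print("original:  ", run_suite(format_price_original))
print("narrow fix:", run_suite(format_price_narrow_fix))
```

The narrow fix makes the reported check pass but silently breaks the CSV export, and only running the whole suite reveals it. A fix that takes the broader context into account would add the "$" in the receipt code instead of in the shared helper.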

The Road Ahead: Enhancing AI Debugging Capabilities

To improve AI’s debugging abilities, researchers are exploring several avenues:

- Enhanced Training Data: By incorporating more examples of human debugging processes into training datasets, AI models can learn to mimic these approaches more effectively.

- Interactive Learning Environments: Tools like Debug-Gym provide AI agents with the opportunity to engage in hands-on debugging, fostering a deeper understanding of the process.

- Integration with Development Tools: Embedding AI debugging assistants into popular development environments can facilitate real-time assistance and learning.

- Collaborative Debugging: Combining AI capabilities with human oversight can lead to more efficient and accurate debugging processes.
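To illustrate what training data that "captures human debugging processes" might look like, here is one possible record format. The schema (`Step`, `DebugTrace`, `task_id`, `final_patch`) is a hypothetical sketch, not a format used by Debug-Gym or any published dataset. It stores the sequence of actions and observations alongside the final fix, so a model could learn from how the bug was found and not just from the patch.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Step:
    action: str       # what the programmer did, e.g. a pdb command
    observation: str  # what the tool printed in response


@dataclass
class DebugTrace:
    task_id: str
    steps: list[Step] = field(default_factory=list)
    final_patch: str = ""

    def to_json_line(self) -> str:
        # One trace per line (JSON Lines); asdict also converts nested Steps.
        return json.dumps(asdict(self))


trace = DebugTrace(
    task_id="example-001",
    steps=[
        Step("break 5", "Breakpoint 1 at buggy.py:5"),
        Step("continue", "-> return total / (len(values) - 1)"),
        Step("p total", "12"),
    ],
    final_patch="-    return total / (len(values) - 1)\n+    return total / len(values)",
)
print(trace.to_json_line())
```

Keeping the intermediate steps, rather than only the before-and-after code, is what distinguishes this kind of record from an ordinary bug-fix dataset.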
